What is AGI-hard

At this point, knowing what AI can't do is more useful than knowing what it can

The late Larry Tesler is often quoted as saying that

“Artificial intelligence is whatever hasn’t been done yet.”

There’s a very real problem in writing about AI: the term does not have a precise, commonly accepted definition. The scope of AI is essentially unbounded, ranging from “A lot of Ifs” to transcription, translation, computer vision, and prediction, to the currently hyped generative use cases, to fictional sentient beings both digital and physical.

This makes serious debate about productizing AI difficult, because when you can just handwave at anything and say “with enough data this will get solved”1, it is hard to distinguish talking head/futurist/alarmist bullshit from the dreams of serious builders who are making unimaginable, but real, advances.

Brandolini’s law guarantees that there will be more of the former than the latter, yet distinguishing the “adjacent possible” from “outright science fiction” is the most immediate need of investors and founders.

This was the root of my discomfort with dismissing out of hand the responses to my AI Red Wedding piece: to do so, I would be forced to articulate why I think paralegal work is more vulnerable than infographics, or why programmers have less to fear from Codex than transcribers have to fear from Whisper2. Elon has repeatedly been wrong about full self-driving, and now Emad is saying visual presentations like PowerPoint will be “solved in the next couple of years”.

What are the driving factors behind each industry’s “AI vulnerability factor”? If we were forced to choose, is it more profitable (or even possible) to replace the low-skilled or to augment the high-skilled?

I think this is the sort of debate that makes good podcast fodder, but ultimately the sheer diversity of human industry makes top-down analysis very hard. Who would have guessed that the first casualty of Stable Diffusion would not be stock photography companies, which are simply building it into their offerings, but the 3D virtual staging industry? Even among those with existing “AI enabled” solutions, the future is here but not evenly distributed, as this reply about Brex/Ramp demonstrates.

The NP-hard analogy

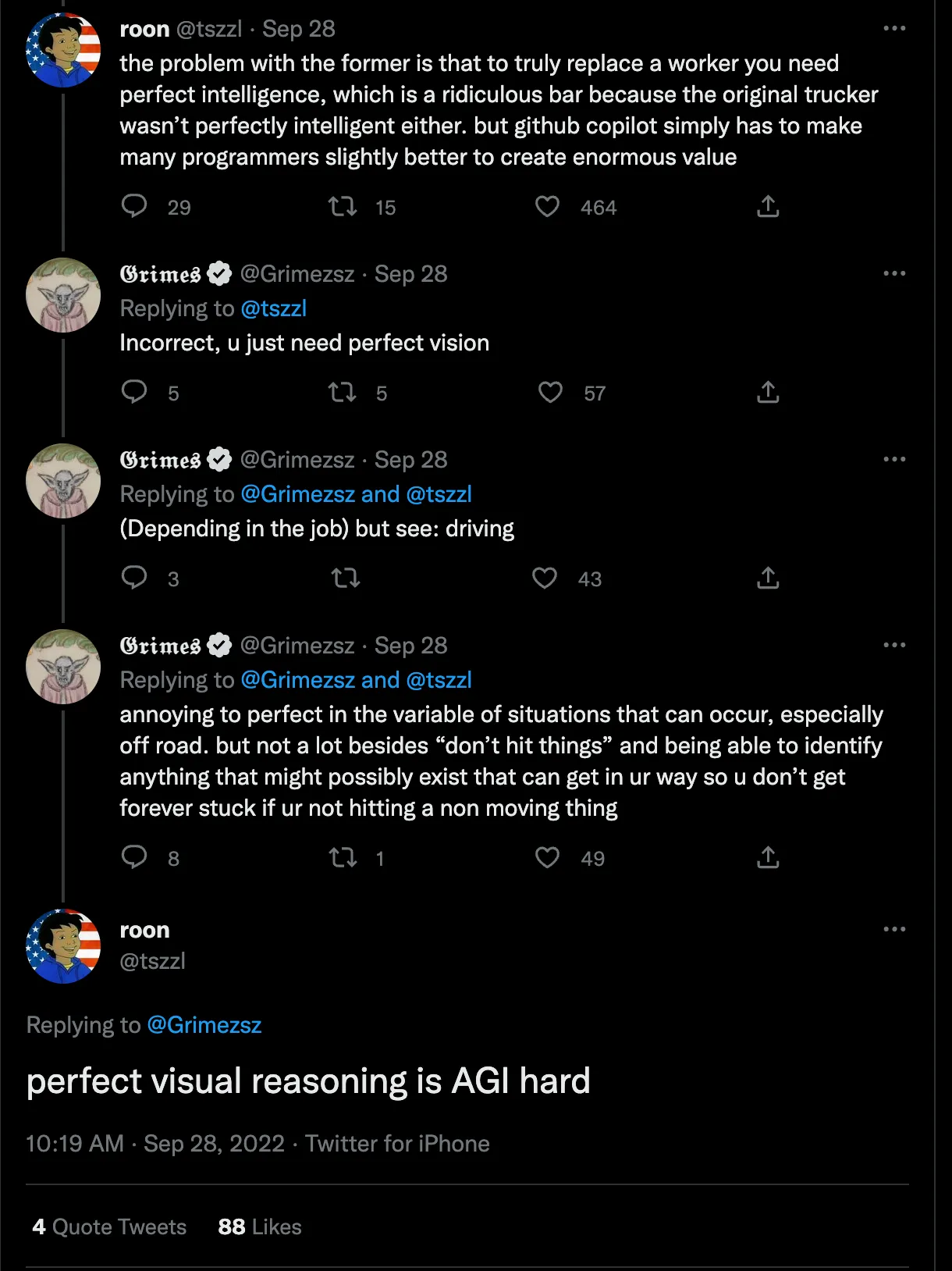

A way to stop the debate comes (here is the most 2022 sentence I have ever typed) from an exchange between roon and Grimes (yes, Elon’s partner Grimes) on self-driving:

Grimes makes a classic tech product error in casually waving away a problem with “just”, and roon calls her out on it by pointing out that “perfect visual reasoning is AGI hard”.

This is a useful “stop sequence” for AI handwaving. Software engineers and computer scientists already have a tool for reasoning about the limits of computation, the P vs NP problem, as well as an accepted wisdom that some areas of algorithm research are too unrealistic to be productive, because succeeding at them would be functionally equivalent to proving P = NP.

I’ll spare you further layman elaboration that you can Google, but the implications of declaring a problem space “NP-hard” (there is also “NP-complete”, which has a distinct definition that we will ignore) are something we can use in productized AI conversations:

Finality: “You can stop here” is very useful for endlessly expansive debates

Equivalence: “If this is true, then X will also be true, do I believe that?” is very useful for thinking through predictions

Similarly, just as we work on the assumption that P ≠ NP, we should remember that as far as we know we don’t have AGI yet, so the class of “AGI-hard” problems should be assumed impossible, or not worth working on, without also having a plan for solving AGI.

In other words, you cannot “just” assume that there will be a solution to AGI-hard problems. All conversations about products requiring AGI-hard solutions are effectively science fiction until we actually have AGI (and we truly don’t know whether that will arrive in 10 years, or 100, or 1000, or never).

Empathy and Theory of Mind

When I asked friends what a good yardstick for “AGI-hard” would be, the universal first response was some form of “Theory of Mind”. A simpler version of this might be called empathy: being able to form a thesis about you, gauge your interests and capabilities, and act accordingly.

This is either a level of difficulty beyond a classic Turing test, or perhaps the ultimate essence of one, since empathy is at the heart of humanity.

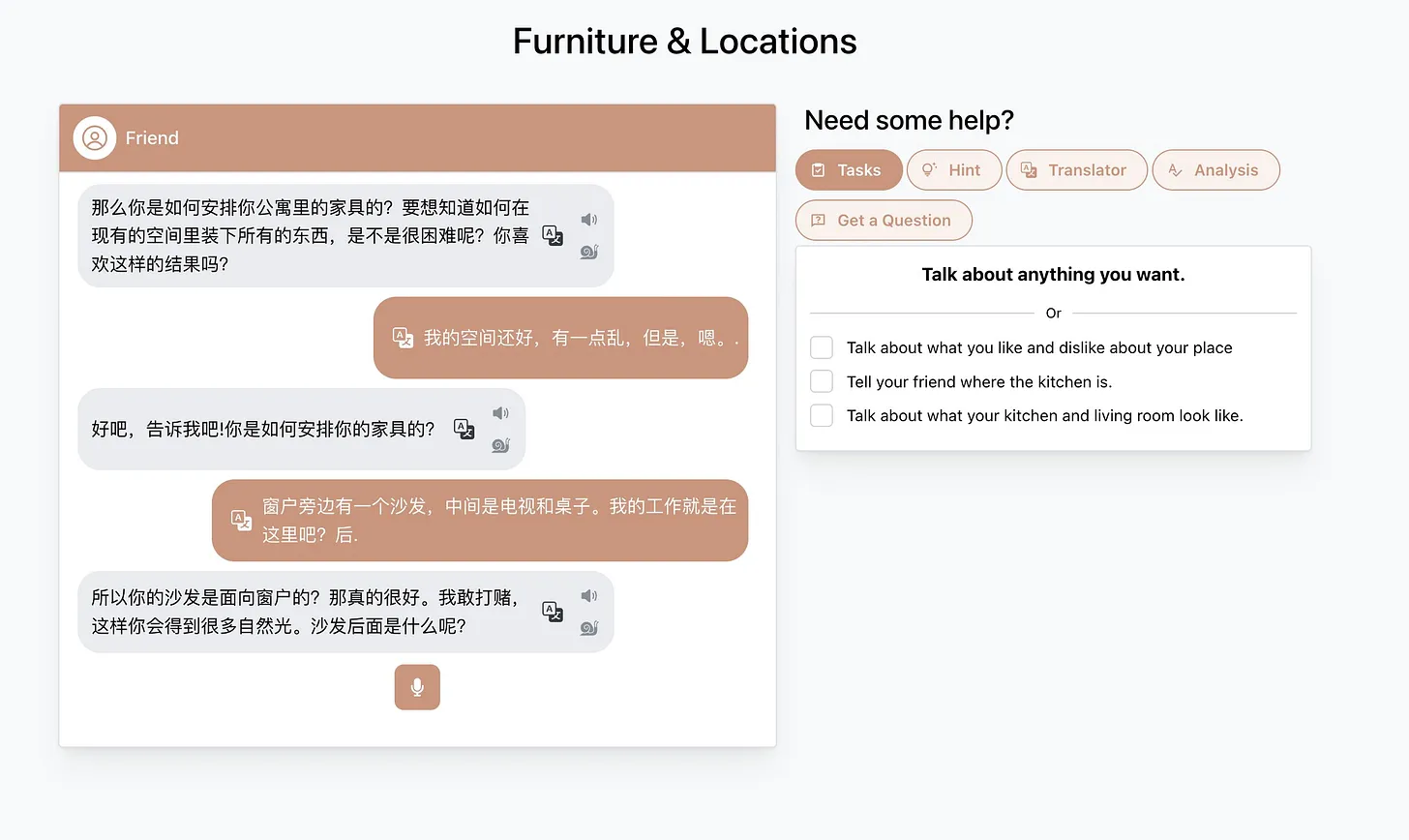

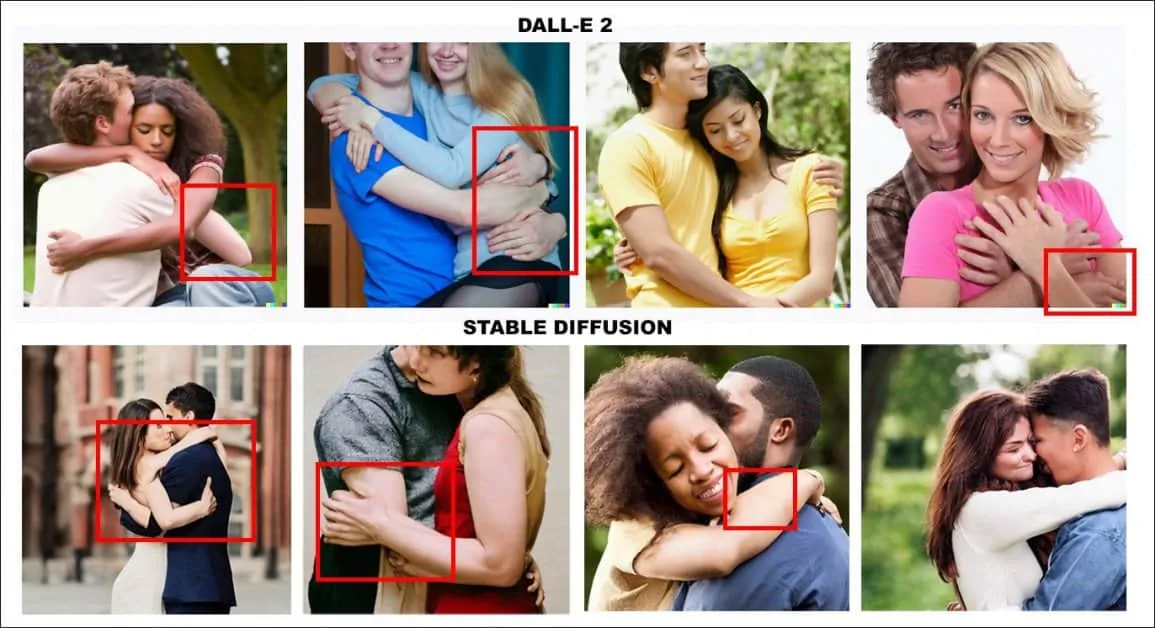

I felt this when trying out Quazel, a language-learning app combining OpenAI Whisper and GPT-3 that recently launched on Hacker News.

Translated:

Impressive (if not actually colloquial), but my Chinese is rusty and halting, and a reasonable Chinese tutor would have immediately detected this and lowered their own communication level to put me at ease. Instead, Quazel barrelled on in an admittedly impressive conversational style, understandable but advanced enough that it might be intimidating.

This intimidation factor is key. The goal for AI applications is not “replicate humans as closely as possible”. We don’t really care about that; in fact, we want AI to be superhuman as long as it is aligned. But we do care that it does the job to be done, which in this case was “help me learn Chinese”. The chatty second question here might spur me to improve my Chinese, but it might cause someone else to retreat into their shell. It would take empathy for an AI language tutor to determine what kind of learner I am and adjust accordingly. We can probably all tell that GPT-3 does not have this capability, and if theory of mind is AGI-hard, then we should also conclude that a level-adjusting AI language tutor is not a viable product to work on (a shame, given that this also makes Bloom’s 2 sigma problem, matching the results of one-on-one tutoring at scale, AGI-hard).

We could approximate empathy: in October 2022 alone we’ve seen papers on Single-Life Reinforcement Learning and In-context Reinforcement Learning, and CarperAI launched trlX, a way to fine-tune language models with Reinforcement Learning from Human Feedback. We could also lower the promises of our products; dull scissors aren’t perfect scissors, but they’re still better than no scissors. But the AGI-hard barrier is a clear line in the sand.

The illusion or belief that our counterpart exists and understands us is also important to us. This is why AI therapists might help some folks, but an AI therapist capable of performing at or above human ability would also be AGI-hard.

Composition and Meaning

F. Scott Fitzgerald is often quoted: "The test of a first-rate intelligence is the ability to hold two opposing ideas in mind at the same time and still retain the ability to function."

This is too high a bar; many humans easily fail this test.

Yet we instinctively know that the ability to speak does not make you intelligent, and the ability of generative AI to create beautiful images like this does not make it an artist that can create this:

Visually interesting, but with a message. The AIs of today would perhaps describe this as “crowd of people looking at 2 paintings of buxom women AI art being auctioned by a robot, while a human artist girl struggles to sell a human painting for $1, watercolor”3. A human who took one look and then closed their eyes could describe the meaning of the art more precisely than they could describe what is specifically in it.

Physical and Conceptual Intuition

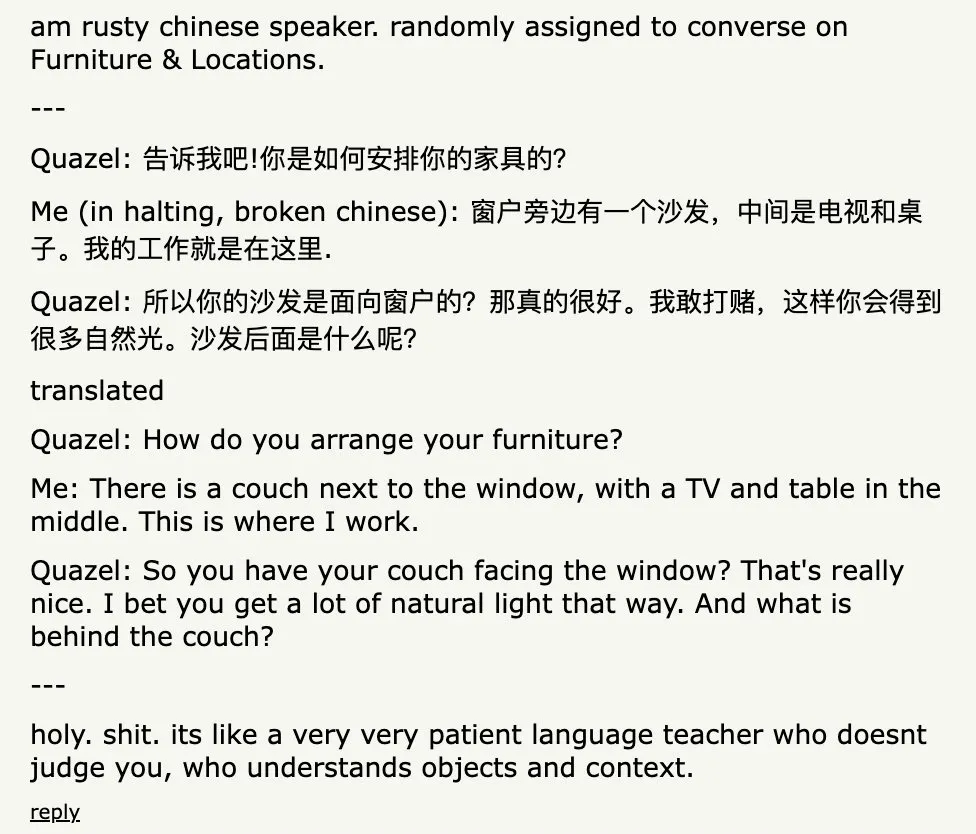

The joy and the problem of latent space is that it is infinitely larger than our real world, which is constrained by nasty inconveniences like physics and economics. A baby is born with a great deal of genetically imprinted transfer learning: time flows in one direction, sound (usually) does not have color, smiles = happiness. After a little time in our world, they learn that the vast majority of humans have two hands, yet our AI art portals happily transport us to worlds where those limits don’t apply.

The simple answer, “get a physics engine and some human models and generate a few billion more training samples for embeddings”, will probably fix this eventually, but that is a hotfix over an endless whack-a-mole of complexity arising from the fact that AI learns from data about the world, yet does not actually live in the world it models (Yann LeCun calls these world models, and notes that we are far from being able to build them). “The map is not the territory”, and with AI we’ve only got very good mapmakers and navigators.

Humanity is Robin Williams, and AI is Matt Damon. The map is not the territory.

The map will only equal the territory when we can fully simulate our world for AI to train in, which means the AGI-hardness problem is also a proxy for the simulation hypothesis. If we can solve simulation, we’ll solve AGI-hard physical modeling problems, but it is less evident that AGI-hard problems are also simulation-hard.

As you may have experienced with the Joe Rogan-Steve Jobs AI podcast, simulation-hard problems are likely also uploading-hard and replica-hard.

At the limit, the physical intuition problem becomes a conceptual intuition problem. GPT-3 can understand some analogies and think step by step, but we can’t trust it to reliably infer concepts because it doesn’t have any real understanding of logic. Ironic, given that computers are built atop logic gates. So:

Math: Can AI invent calculus from first principles?

Physics: Can AI do Einsteinian thought experiments and derive relativity?

Finance: Can AI look at option payoffs and know to borrow from the heat equation to create Black-Scholes?

Music: Can AI have taste? Set trends? Make remixes and interpolations? How many infinite monkeys would it take for an AI to come up with “you made a rebel of a careless man’s careful daughter”, and how many more to understand how special that line is?

Programming: Can AI create AI? Quine LLMs?

These physical and conceptual intuition-type problems are likely to be AGI-hard.

But we’ve derived some useful equivalencies at least: AGI-hard = Simulation-hard = Uploading-hard = Replica-hard.

Adjacent Impossible

Perhaps AGI-hard is too hard: it’s tiring and depressing (or perhaps reassuring, depending on your view) to keep coming up with ways computers will never be good enough.

But it’s also useful to keep a list of “things AI is totally capable of, but just can’t do yet”, so you can test whether you’re dealing with an AI. Using AI (the Adjacent Impossible) to combat AI, if you will.

This is a moving target; the original CAPTCHA was a perfectly good Turing test, but had to be retired when AI solved it better than humans. Not perfect, but useful.

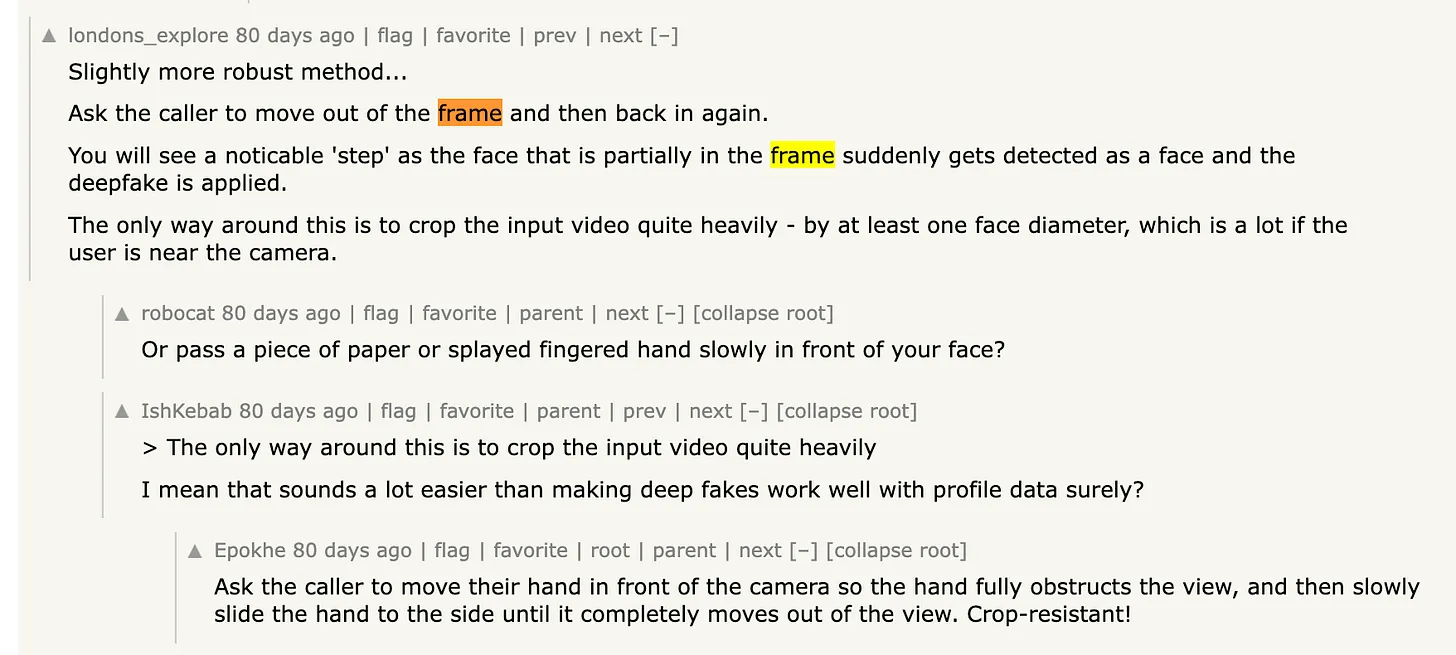

My favorite recent example is detecting deepfakes by asking the caller to turn sideways:

Of course, this is just a question of lack of data, but it is pretty obvious that we don’t have anywhere near as much data on face profiles at different angles as we do on head-on face photos.

In particular, NeRFs are making it much easier to create and navigate virtual space:

Someday, though, that trick will fall to the ever-progressing AI. Then you have other options:

Postscripts

Lex Fridman asks Andrej Karpathy a question, and Karpathy reframes the question before answering. “I wonder if a language model can do that.”

Melanie Mitchell of the Santa Fe Institute has also published a paper, “Why AI is Harder Than We Think”, covering four fallacies:

Fallacy 1: Narrow intelligence is on a continuum with general intelligence

Fallacy 2: Easy things are easy and hard things are hard

Fallacy 3: The lure of wishful mnemonics

Fallacy 4: Intelligence is all in the brain

Interview here

Join the Discussion

If you enjoyed this post, I’d appreciate a reshare on your hellsite of choice :)

Double Descent, aka “yes it looks like there’s a limit, but seriously, just add more data”, is the most nihilistic finding in AI.

While we understand that AI can turn comments into code, turning JIRA tickets into code is another, seemingly unreachable, level. The latter feels like it is AGI-hard: but why?

CLIP Interrogator says “a painting of a crowd of people standing around, a detailed painting, featured on pixiv, context art, selling his wares, short blue haired woman, sona is a slender, disgaea, wearing pants, under bridge, commercially ready, furraffinity, displayed in a museum, psp, anthropomorphic _ humanoid”.

Subscribe to L-Space Diaries

Productized AI/ML, Software 3.0, and other notes from Latent Space
