(This is part 3 of a series on an AI-generated TV show. Click here to check out part 2.)

We’ve covered a lot of ground, from high-level concept to a finished script. But a script on its own isn’t very compelling: we need to film it! The video production process mimics a Hollywood production, because it operates under similar constraints:

The AI generates the dialog and high-level instructions for music, camera angles, and so on. These are handed to a “production crew” made up of a visualizer program (written by me) and a human (me) who creates additional sets, characters, sounds, etc., as needed. The visualizer “films” the episode and produces the final video.

Fundamentally, the production system is responsible for generating the 150 or so billion pixels that make up a 15-minute video. It makes the moment-to-moment decisions about lighting, camera angles, set design, costumes, voice “casting”, sound, music, and special effects needed to execute the director’s vision, and it combines all of this into a coherent whole.
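As a rough sanity check on the scale involved, the pixel count is simply width × height × frames, where frames = frame rate × duration in seconds. The post doesn’t state the episodes’ resolution or frame rate, so the formats below are assumptions chosen only for illustration.

```python
def total_pixels(width: int, height: int, fps: float, minutes: float) -> int:
    """Pixels rendered for a video of the given resolution, frame rate, and length."""
    frames = round(fps * minutes * 60)
    return width * height * frames


# Common output formats (assumptions; the post doesn't say which one is used).
FORMATS = {
    "1080p @ 30 fps": (1920, 1080, 30),
    "1080p @ 60 fps": (1920, 1080, 60),
    "4K @ 30 fps": (3840, 2160, 30),
}

for label, (width, height, fps) in FORMATS.items():
    billions = total_pixels(width, height, fps, minutes=15) / 1e9
    print(f"{label}: ~{billions:.0f} billion pixels")
```

Under these assumptions, the script prints roughly 56, 112, and 224 billion pixels. The total scales directly with both resolution and frame rate.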

Let’s go through the key pieces of the production system.

The Backlot

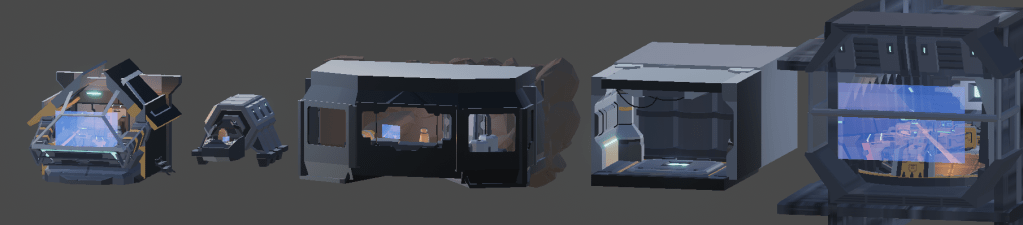

Here are a few of the show’s major sets, all in a neat row. It’s easy to imagine them built out of plywood on a soundstage, isn’t it? During filming, they feel like real places. But if you step back, you see just how little “reality” there is:

Of course, I am standing on the shoulders of giants. Check out the set used to film the Millennium Falcon cockpit below: only a tiny portion of the ship was actually built. If it was good enough for Lucas, it’s good enough for me!

In some versions of On Screen, I set up a large open world for filming. But that made getting good results harder, not easier. The wide range of choices in these large areas confused the AI, and it produced nonsensical scenes. A big world also carries much more information, which required additional systems to keep the LLM from being overwhelmed.

Smaller sets, focused on specific story purposes, are much easier for the AI to use effectively.

Actors are defined by their names, costumes, and voices. I made a tool (below) for editing names, base geometry, accessories, and voice settings, which made building out the cast go quickly. Having a single “Actor” that can configure itself to “wear” any costume also greatly simplifies the implementation.
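The single-Actor idea can be sketched as plain data. There is one `Actor` type, and its look and voice are just fields, so changing costumes is a data swap rather than a new class. This is a minimal illustration, not the show’s code. The names `Costume`, `Voice`, `wear`, `assets_to_load`, and the voice parameters are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Voice:
    """Hypothetical text-to-speech settings; the real parameters aren't described."""
    preset: str
    pitch: float = 1.0
    speed: float = 1.0


@dataclass(frozen=True)
class Costume:
    """Base geometry plus any number of accessories layered on top."""
    base_geometry: str
    accessories: tuple[str, ...] = ()


@dataclass
class Actor:
    """One actor type for the whole cast: identity, look, and voice are just data."""
    name: str
    costume: Costume
    voice: Voice

    def wear(self, costume: Costume) -> None:
        # Changing costume swaps data only; animation and behavior code is shared.
        self.costume = costume

    def assets_to_load(self) -> list[str]:
        # The meshes a renderer would need to instantiate for this actor.
        return [self.costume.base_geometry, *self.costume.accessories]


captain = Actor("Captain", Costume("humanoid_base", ("mask", "cape")), Voice("baritone"))
print(captain.assets_to_load())  # ['humanoid_base', 'mask', 'cape']
captain.wear(Costume("humanoid_base", ("spacesuit_helmet",)))
print(captain.assets_to_load())  # ['humanoid_base', 'spacesuit_helmet']
```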

Actors use a mix of canned and procedural animations. An animation state machine sequences individual animations for smooth motion when an actor goes from sitting to standing, typing to not typing, and so on.
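One way to get those smooth in-between motions is to treat poses as states and transition clips as edges between them. The system then searches for the shortest chain of clips from the current pose to the requested one. The sketch below assumes that design. The pose and clip names are made up, and a real animation state machine would also handle blending and timing.

```python
from collections import deque

# Hypothetical transition clips: (from_pose, to_pose) -> clip that plays between them.
TRANSITIONS = {
    ("standing", "sitting"): "sit_down",
    ("sitting", "standing"): "stand_up",
    ("sitting", "typing"): "start_typing",
    ("typing", "sitting"): "stop_typing",
}


def plan_clips(current: str, target: str) -> list[str]:
    """Shortest sequence of transition clips from one pose to another (breadth-first search)."""
    if current == target:
        return []
    queue = deque([(current, [])])
    seen = {current}
    while queue:
        pose, clips = queue.popleft()
        for (src, dst), clip in TRANSITIONS.items():
            if src != pose or dst in seen:
                continue
            if dst == target:
                return clips + [clip]
            seen.add(dst)
            queue.append((dst, clips + [clip]))
    raise ValueError(f"no transition path from {current!r} to {target!r}")


# A typing actor asked to stand must stop typing before getting up.
print(plan_clips("typing", "standing"))  # ['stop_typing', 'stand_up']
```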

A gaze system tracks interesting things near each actor and generates the head motion to look at them. When an actor is speaking, a wagging motion is applied to their head. The wagging is stupid simple: the louder their audio is at a given moment, the more their head moves. But it’s effective!
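The loudness-to-motion mapping can be as small as a couple of functions. This sketch makes three assumptions. Loudness is the root-mean-square (RMS) of the most recent window of audio samples in [-1, 1]. The result drives a head pitch offset in degrees. `gain` and `max_degrees` are hypothetical tuning knobs, not values from the show.

```python
import math


def rms(window: list[float]) -> float:
    """Root-mean-square loudness of a window of audio samples in [-1, 1]."""
    if not window:
        return 0.0
    return math.sqrt(sum(s * s for s in window) / len(window))


def head_wag_degrees(window: list[float], gain: float = 4.0, max_degrees: float = 6.0) -> float:
    """Louder audio in the current window -> larger head pitch offset, clamped to max_degrees."""
    return max_degrees * min(1.0, gain * rms(window))


# Called once per frame with the audio the actor is currently speaking.
quiet = [0.05, -0.05, 0.05, -0.05]
loud = [0.6, -0.6, 0.6, -0.6]
print(head_wag_degrees(quiet), head_wag_degrees(loud))
```

Speech loudness rises and falls syllable by syllable, so even this direct mapping makes the head bob. Averaging the value over a few frames would reduce jitter if it looks twitchy.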

Early versions of the gaze system didn’t know whether points of interest were in the same set as the actor. An actor speaking in a far-away set caused other actors to look in seemingly random directions as they turned their heads toward it. Once the system understood which region belonged to which set (the green box below), it could ignore distant actors and behave sensibly.
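The fix amounts to a containment test. Assume each set’s region is an axis-aligned box. A gaze target is then kept only if it lies in the same box as the actor doing the looking. `SetBounds`, `home_set`, `same_set_targets`, and the set names are hypothetical, used here for illustration only.

```python
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class SetBounds:
    """Axis-aligned box enclosing one set."""
    name: str
    lo: Vec3
    hi: Vec3

    def contains(self, p: Vec3) -> bool:
        return all(a <= c <= b for a, c, b in zip(self.lo, p, self.hi))


def home_set(p: Vec3, sets: list[SetBounds]) -> Optional[SetBounds]:
    """The first set whose box contains p, or None if p is outside every set."""
    return next((s for s in sets if s.contains(p)), None)


def same_set_targets(actor_pos: Vec3, targets: dict[str, Vec3],
                     sets: list[SetBounds]) -> list[str]:
    """Names of gaze targets in the same set as the actor; everything else is ignored."""
    home = home_set(actor_pos, sets)
    if home is None:
        return []
    return [name for name, pos in targets.items() if home.contains(pos)]


sets = [SetBounds("bridge", (0, 0, 0), (10, 3, 10)),
        SetBounds("galley", (20, 0, 0), (30, 3, 10))]
targets = {"pilot": (6, 1, 4), "cook": (25, 1, 5)}
print(same_set_targets((5, 1, 5), targets, sets))  # ['pilot']
```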

All of these behaviors, as well as pathfinding, are coordinated by an overall behavioral state machine.
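A coordinating state machine can be sketched as a table of allowed transitions. Each state also lists the lower-level systems it switches on. The states, transitions, and subsystem names here are hypothetical, since the post doesn’t list them.

```python
from enum import Enum, auto


class Behavior(Enum):
    IDLE = auto()
    WALKING = auto()   # following a route from the pathfinder
    SEATED = auto()
    SPEAKING = auto()


# Which lower-level systems each behavior turns on (hypothetical names).
ACTIVE_SYSTEMS = {
    Behavior.IDLE: {"gaze"},
    Behavior.WALKING: {"pathfinding", "walk_anim"},
    Behavior.SEATED: {"gaze", "seated_anim"},
    Behavior.SPEAKING: {"gaze", "head_wag"},
}

# Allowed transitions; anything else is rejected.
ALLOWED = {
    Behavior.IDLE: {Behavior.WALKING, Behavior.SEATED, Behavior.SPEAKING},
    Behavior.WALKING: {Behavior.IDLE, Behavior.SEATED},
    Behavior.SEATED: {Behavior.IDLE, Behavior.SPEAKING},
    Behavior.SPEAKING: {Behavior.IDLE, Behavior.SEATED},
}


class ActorBehavior:
    def __init__(self) -> None:
        self.state = Behavior.IDLE

    def can_transition(self, new_state: Behavior) -> bool:
        return new_state in ALLOWED[self.state]

    def transition(self, new_state: Behavior) -> set[str]:
        """Switch states and return (a copy of) the systems that should now be running."""
        if not self.can_transition(new_state):
            raise ValueError(f"cannot go from {self.state.name} to {new_state.name}")
        self.state = new_state
        return set(ACTIVE_SYSTEMS[new_state])


actor = ActorBehavior()
print(sorted(actor.transition(Behavior.WALKING)))  # ['pathfinding', 'walk_anim']
print(actor.can_transition(Behavior.SPEAKING))      # False
```

Keeping the rules in one table gives a single place to decide which combinations are legal. Here, for example, an actor can’t start speaking mid-walk.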

Sets are built by kitbashing (i.e., smashing together lots of small pieces from other models). The current sets came together quickly, in a few hours of focused work.

Other ingredients are necessary, though:

- Markers for where actors can sit/stand. These appear as yellow balls with names above them.

- The holoscreen. Each set always has one, although it is invisible most of the time. Holoscreens greatly increase dramatic possibilities without adding much complexity for the AI.

- The bounds of the set, shown as the green wireframe box. Camera selection, holoscreen operation, and gaze sometimes need to be limited to the same set.

- Markers for camera placement.
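Put together, a set’s extra ingredients fit in a small record. A validation pass can then catch a missing piece before filming. This sketch assumes axis-aligned bounds and point markers. All the names and the specific checks are illustrative, not taken from the show.

```python
from dataclasses import dataclass, field
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class SetDefinition:
    """Hypothetical record of one set and the markers it needs for filming."""
    name: str
    bounds_lo: Vec3                     # green wireframe box, min corner
    bounds_hi: Vec3                     # green wireframe box, max corner
    actor_marks: dict[str, Vec3] = field(default_factory=dict)   # sit/stand spots
    camera_marks: dict[str, Vec3] = field(default_factory=dict)  # not bounds-checked here
    holoscreen: Optional[Vec3] = None   # every set should have one

    def inside(self, p: Vec3) -> bool:
        return all(a <= c <= b for a, c, b in zip(self.bounds_lo, p, self.bounds_hi))

    def problems(self) -> list[str]:
        """Everything that would stop the set being filmed; an empty list means ready."""
        issues = []
        if self.holoscreen is None:
            issues.append("missing holoscreen")
        elif not self.inside(self.holoscreen):
            issues.append("holoscreen outside bounds")
        if not self.actor_marks:
            issues.append("no actor marks")
        if not self.camera_marks:
            issues.append("no camera marks")
        issues += [f"actor mark {n!r} outside bounds"
                   for n, p in self.actor_marks.items() if not self.inside(p)]
        return issues


bridge = SetDefinition("bridge", (0, 0, 0), (10, 3, 10),
                       actor_marks={"captain_chair": (5, 0, 5)},
                       camera_marks={"wide": (5, 2, 14)})
print(bridge.problems())  # ['missing holoscreen']
bridge.holoscreen = (5, 2, 0)
print(bridge.problems())  # []
```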

So far, the AI uses only some of these features. Making them all work reliably is a challenge, both in the raw functionality and in teaching the AI to use them. The biggest work-in-progress feature is letting actors walk around the set. Unfortunately, the AI loves moving actors around, and often has them sprint from place to place, or even leave the set, between lines!

Lighting makes a huge difference in the quality, depth, and legibility of a scene. Above left is a scene lit by half a dozen small, hand-tuned lights; above right is the same scene with default lighting.

The lighting scheme is very simple: the background is darker, with cool blue colors, and the foreground is brighter, with warm orange colors. Lights are placed to illuminate the character from the side, so that his face has clear, defined features. Notice that the crease in his mask is plainly visible in the left-hand version, giving a sense of depth. In the right-hand version, without the hand-tuned lights, there is no definition and his face looks flat.
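The Lambertian diffuse term, brightness = max(0, N·L), shows why side lighting reveals the crease. The sketch below models the crease as two faces tilted 30° to either side. That angle is an assumption, not a measurement of the mask, and the sketch ignores color, shadows, and everything else a real renderer does. A light that strikes both faces at the same angle makes them equally bright, so the crease disappears. A light from the side makes one face brighter than the other.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(normal, to_light):
    """Lambertian diffuse term: max(0, N·L), with both vectors normalized."""
    n, l = normalize(normal), normalize(to_light)
    return max(0.0, sum(a * b for a, b in zip(n, l)))


# The two faces of a crease, each tilted 30 degrees away from the camera axis (+z).
tilt = math.radians(30)
left_face = (-math.sin(tilt), 0.0, math.cos(tilt))
right_face = (math.sin(tilt), 0.0, math.cos(tilt))


def crease_contrast(to_light):
    """Brightness difference between the two faces; 0 means the crease vanishes."""
    return abs(lambert(left_face, to_light) - lambert(right_face, to_light))


print(f"front light: {crease_contrast((0, 0, 1)):.2f}")  # front light: 0.00
print(f"side light:  {crease_contrast((1, 0, 1)):.2f}")  # side light:  0.71
```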

Exterior shots are done just as they were in the old days of film: with a miniature version of the ship, shown below. In fact, many different ships share the same location, all invisible except the one being shown.
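Keeping several ships at one spot and revealing only one is essentially a visibility switch. Here is a minimal sketch with hypothetical variant names. In a real engine, this would toggle the models’ renderers rather than entries in a dictionary.

```python
class MiniatureStand:
    """Several ship models share one location; exactly one is visible at a time."""

    def __init__(self, variants: list[str]) -> None:
        if not variants:
            raise ValueError("need at least one ship variant")
        self.visible = {name: False for name in variants}
        self.show(variants[0])

    def show(self, name: str) -> None:
        """Make `name` the only visible model."""
        if name not in self.visible:
            raise KeyError(f"unknown ship variant: {name}")
        for variant in self.visible:
            self.visible[variant] = variant == name

    @property
    def current(self) -> str:
        return next(name for name, shown in self.visible.items() if shown)


stand = MiniatureStand(["ship_cruising", "ship_damaged", "ship_landing"])
stand.show("ship_damaged")
print(stand.current)  # ship_damaged
```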